Unity Catalog Create Table

Unity Catalog (UC) is the foundation for all governance and management of data objects in the Databricks Data Intelligence Platform, and it makes it easy for multiple users to collaborate on the same data assets. The open source project is developed in the unitycatalog/unitycatalog repository on GitHub. Its CLI tool allows users to interact with a Unity Catalog server to create and manage catalogs, schemas, tables across different formats, volumes with unstructured data, functions, ML and ...

To create a catalog, you can use Catalog Explorer, a SQL command, the REST API, the Databricks CLI, or Terraform. When you create a catalog, two schemas (databases) ... To create a new schema in the catalog, you must have the CREATE SCHEMA privilege on the catalog. A related topic is sharing Unity Catalog across Azure Databricks environments.

Unity Catalog lets you create managed tables and external tables. Managed tables are the default when you create tables in Databricks. They always use Delta Lake, and Unity Catalog fully manages their lifecycle and file layout; see "Work with managed tables". To create an external table, run a command in a notebook or the SQL query editor, replacing any placeholder values with your own. One example runs a notebook that creates a table named department in the workspace catalog and default schema (database).

With the open source CLI, there is a command to create a new Delta table in your Unity Catalog. To create a table in a storage format such as Parquet, ORC, Avro, CSV, JSON, or text, use the bin/uc table create command. This command has multiple parameters, among them the full name of the table, which is a concatenation of the ... This is useful when, for example, you need to work together on a Parquet table with an external client. For Apache Spark and Delta Lake to work together with Unity Catalog, you will need at least Apache Spark 3.5.3 and Delta Lake 3.2.1.

Materialized views in Databricks SQL can be created and refreshed to improve performance and reduce the cost of ... Significantly reduce refresh costs by ... You can use an existing Delta table in Unity Catalog that includes a ... You can also publish datasets from Unity Catalog to Power BI directly from data pipelines, and update Power BI when your data updates.
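The minimum versions quoted above (Apache Spark 3.5.3 and Delta Lake 3.2.1) can be checked before wiring Spark to a Unity Catalog server. The following is a minimal sketch in plain Python; the helper names `parse_version` and `meets_minimums` are hypothetical and not part of any Unity Catalog, Spark, or Delta Lake API. It compares versions as integer tuples so that, for instance, 3.10.0 is correctly treated as newer than 3.5.3.

```python
# Minimum versions as stated in the text; everything else here is illustrative.
MIN_SPARK = (3, 5, 3)
MIN_DELTA = (3, 2, 1)


def parse_version(version: str) -> tuple:
    """Turn '3.5.3' into (3, 5, 3), ignoring a suffix such as '-SNAPSHOT'."""
    core = version.split("-", 1)[0]
    return tuple(int(part) for part in core.split("."))


def meets_minimums(spark_version: str, delta_version: str) -> bool:
    """Return True when both versions are at or above the stated minimums."""
    return (
        parse_version(spark_version) >= MIN_SPARK
        and parse_version(delta_version) >= MIN_DELTA
    )


if __name__ == "__main__":
    # Hypothetical version strings; substitute the ones from your environment.
    print(meets_minimums("3.5.3", "3.2.1"))
    print(meets_minimums("3.5.2", "3.2.1"))
```

Comparing tuples rather than raw strings avoids the lexicographic trap where "3.10.0" sorts before "3.5.3".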
Step by step guide to setup Unity Catalog in Azure La data avec Youssef

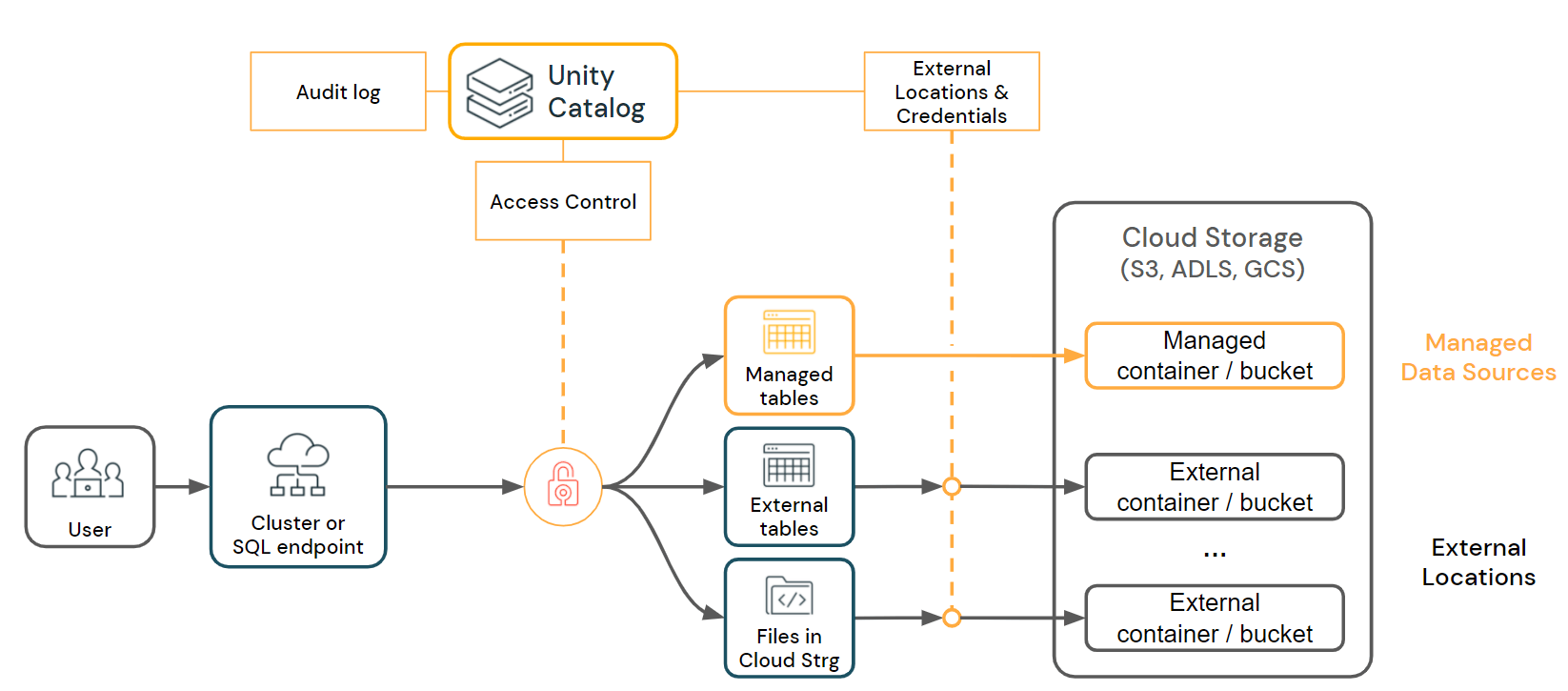

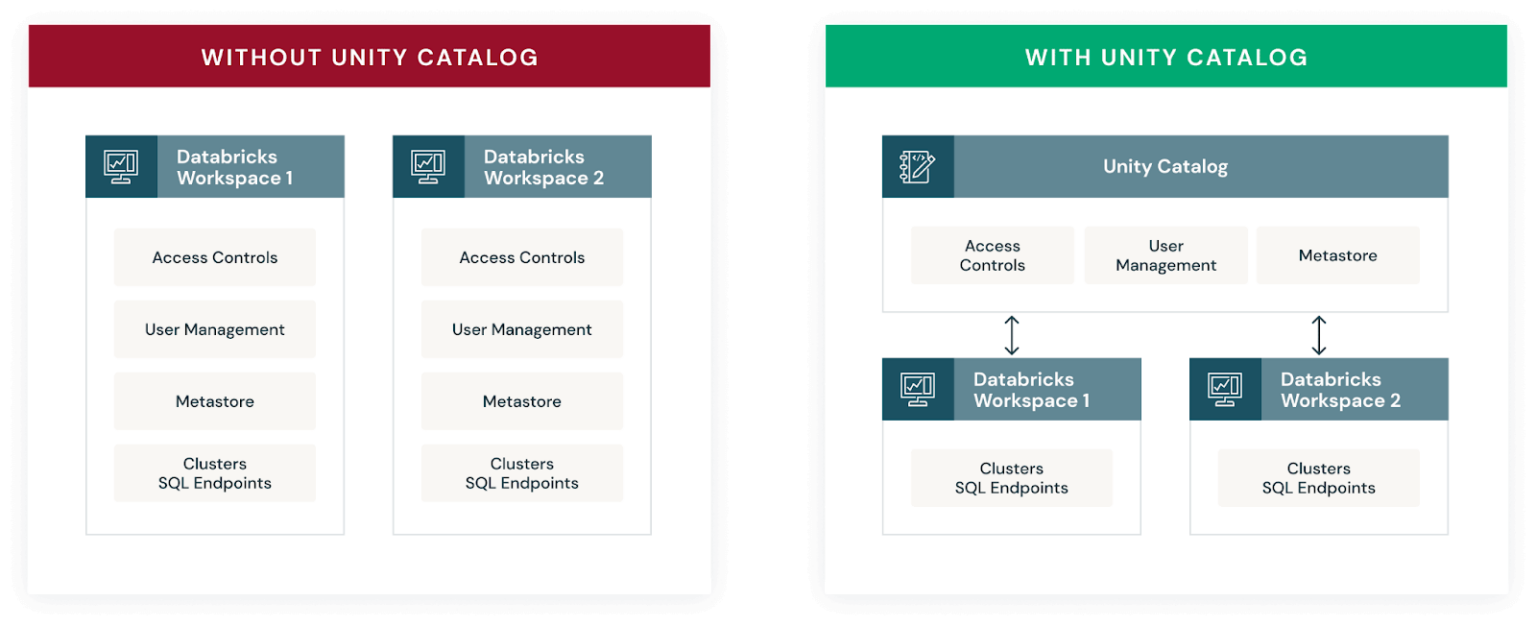

Introducing Unity Catalog A Unified Governance Solution for Lakehouse

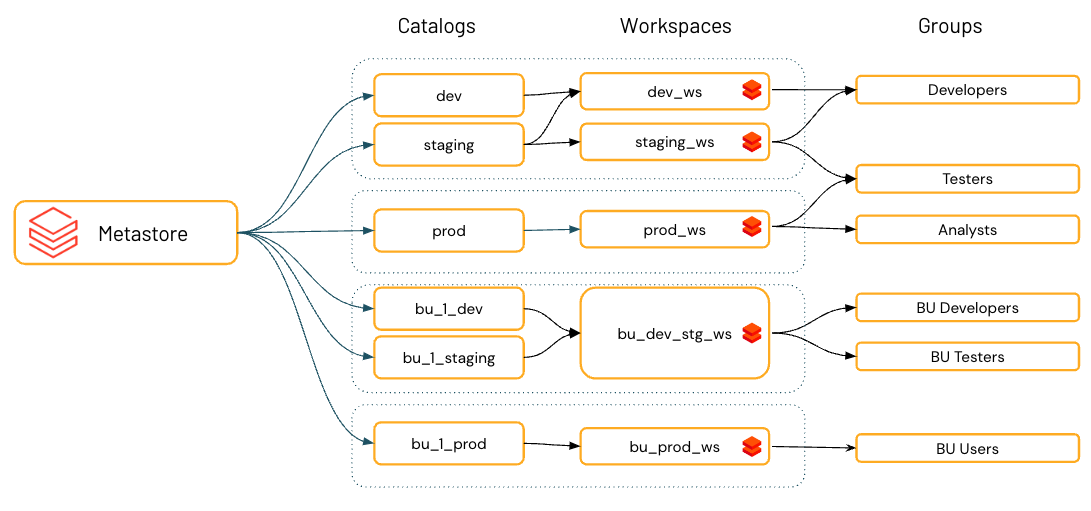

Unity Catalog best practices Azure Databricks Microsoft Learn

Introducing Unity Catalog A Unified Governance Solution for Lakehouse

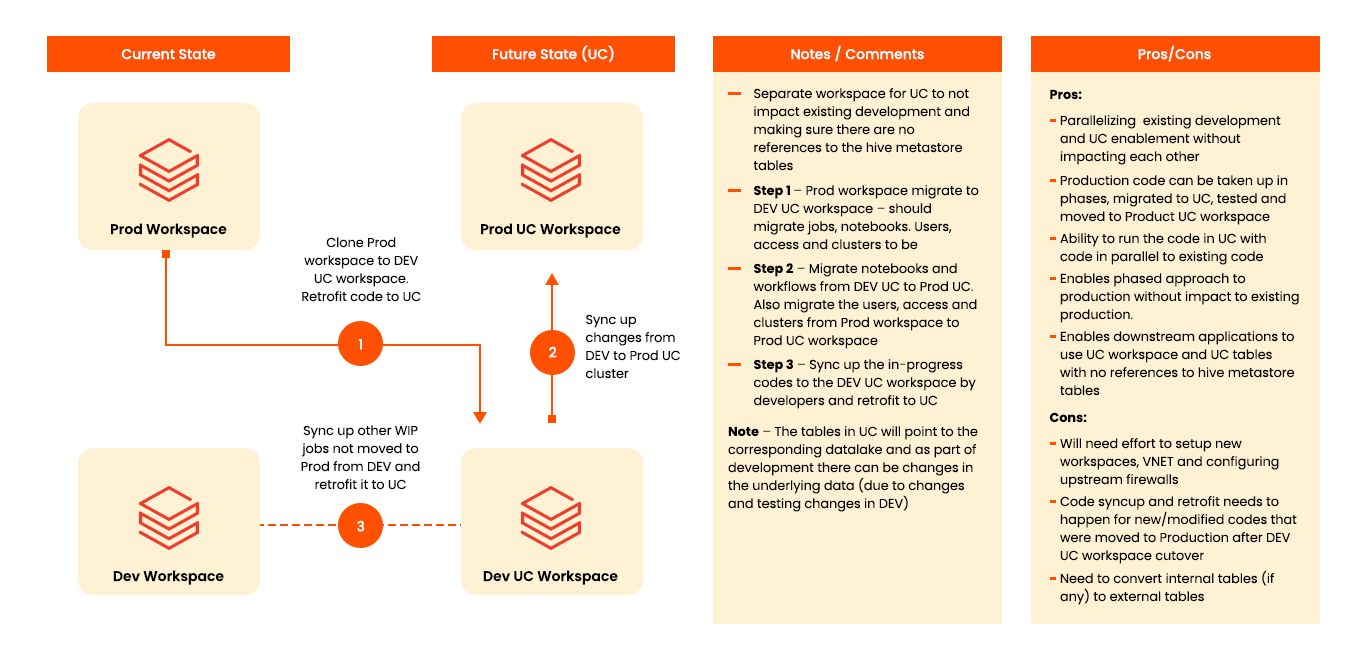

Upgrade Hive Metastore to Unity Catalog Databricks Blog

Unity Catalog Migration A Comprehensive Guide

Ducklake A journey to integrate DuckDB with Unity Catalog Xebia

Demystifying Azure Databricks Unity Catalog Beyond the Horizon...

How to Read Unity Catalog Tables in Snowflake, in 3 Easy Steps

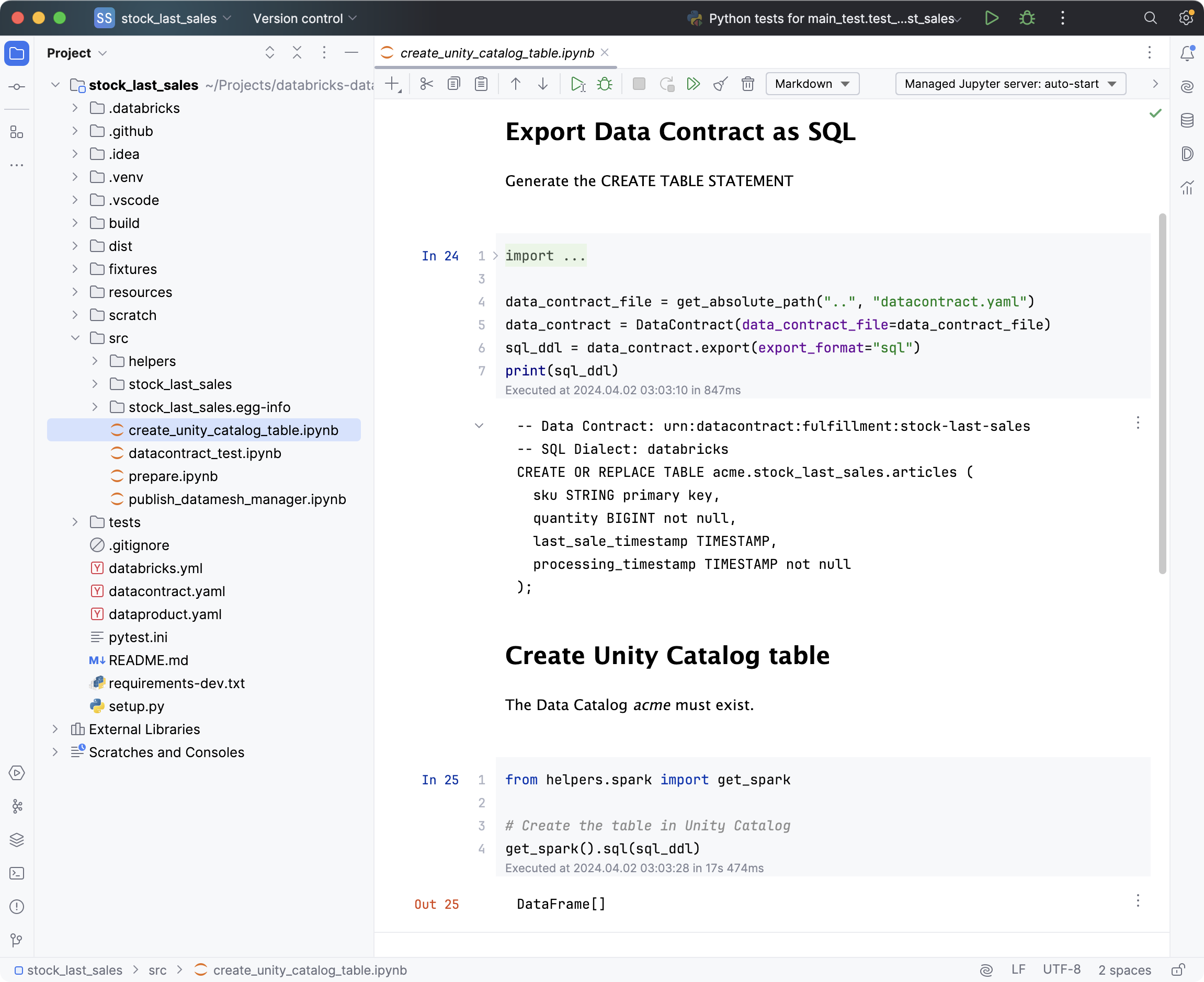

Build a Data Product with Databricks

Use One Of The Following Command Examples In A Notebook Or The Sql Query Editor To Create An External Table.
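The original command examples did not survive on this page, so the following is only a sketch of what such a command might look like. It assumes the Databricks SQL form `CREATE TABLE <name> (<columns>) LOCATION '<path>'` with a three-part table name; the helper `external_table_sql` and all names and paths passed to it are hypothetical placeholders to replace with your own values. The Python function just assembles the statement text, which you would then run in a notebook or the SQL query editor.

```python
def external_table_sql(full_name: str, columns: dict, location: str) -> str:
    """Build a CREATE TABLE ... LOCATION statement (assumed syntax, see lead-in)."""
    # Keep the sketch simple: refuse paths that would need quote escaping.
    if "'" in location:
        raise ValueError("location must not contain single quotes")
    column_spec = ", ".join(f"{name} {dtype}" for name, dtype in columns.items())
    return f"CREATE TABLE {full_name} ({column_spec}) LOCATION '{location}'"


# Placeholder values only; none of these come from the original text.
statement = external_table_sql(
    "my_catalog.my_schema.my_table",
    {"id": "INT", "name": "STRING"},
    "s3://my-bucket/my-table",
)
print(statement)
```

Creating an external table this way typically also requires that the storage path is already registered and permitted in Unity Catalog, which this sketch does not cover.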

Unity Catalog Lets You Create Managed Tables And External Tables.


Sharing The Unity Catalog Across Azure Databricks Environments.
